Objectives

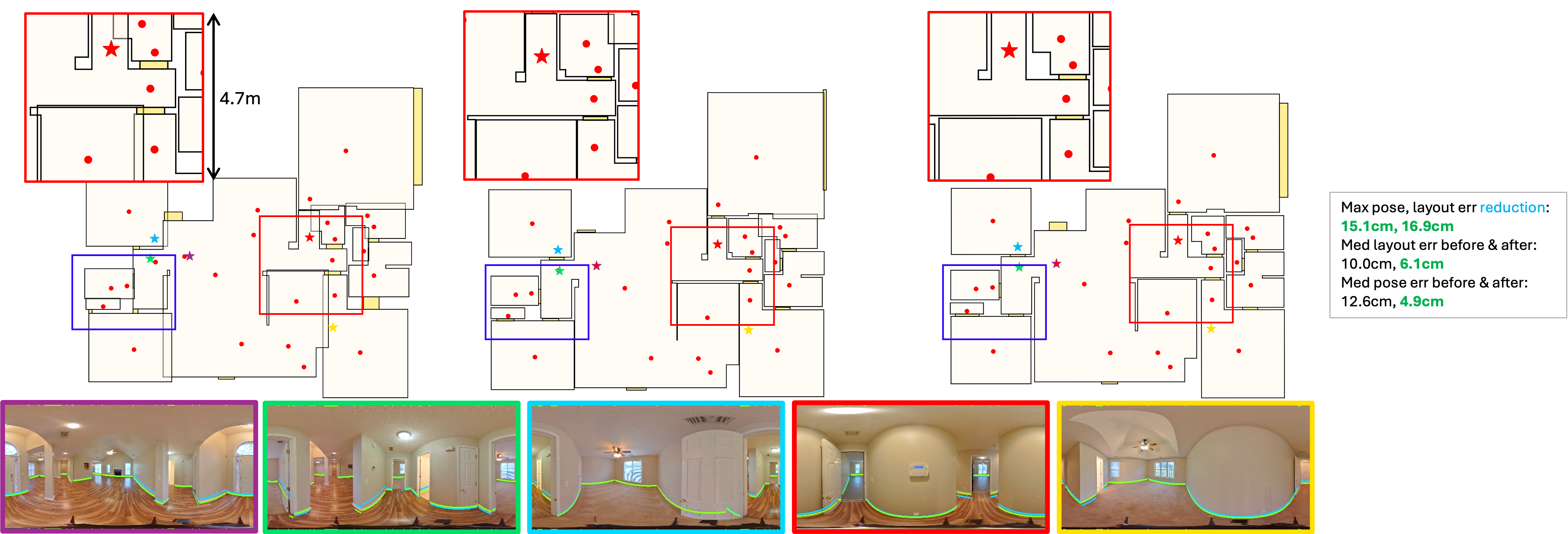

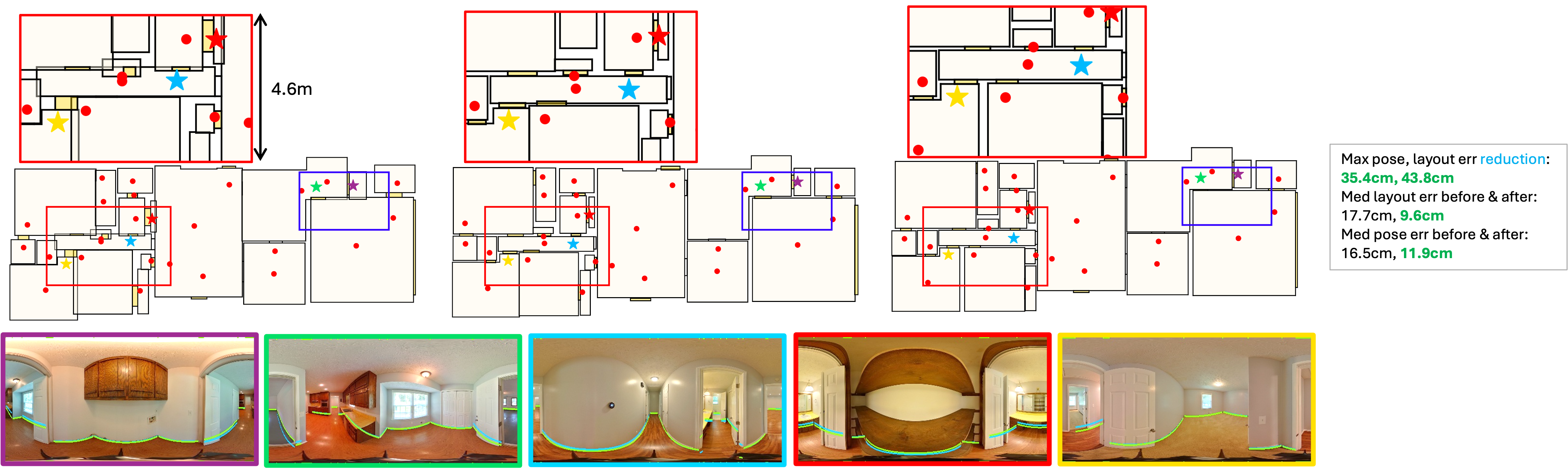

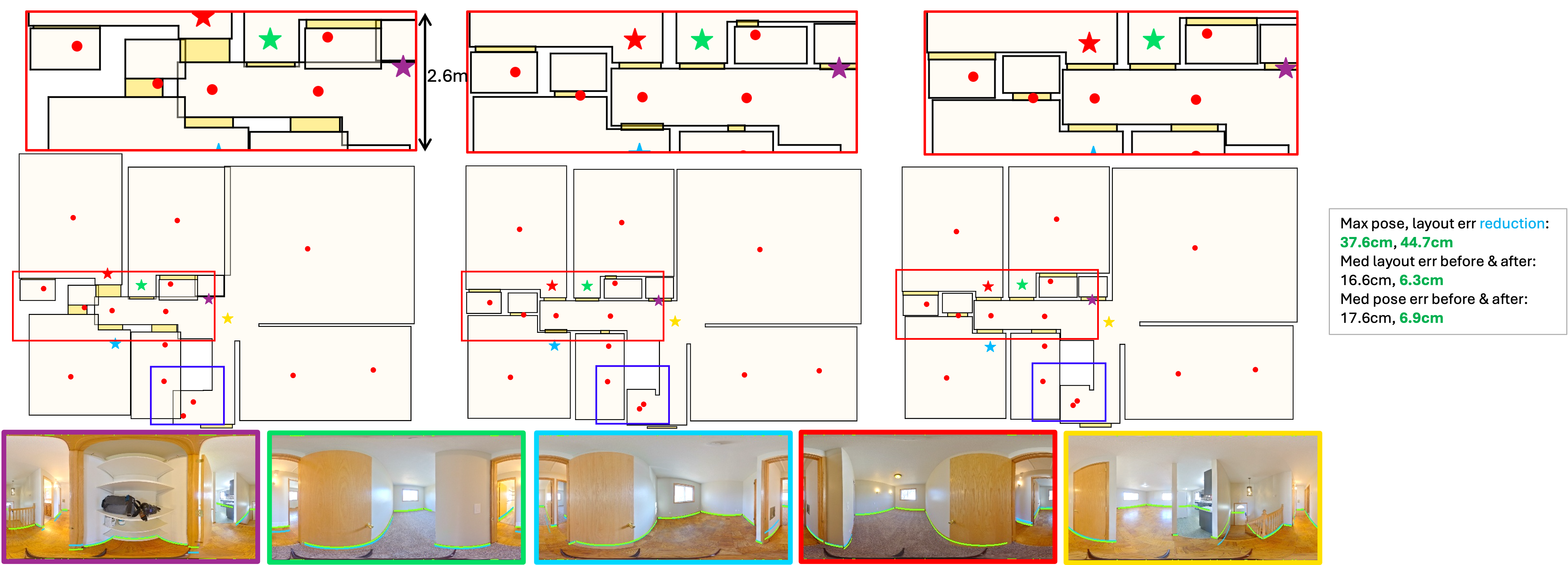

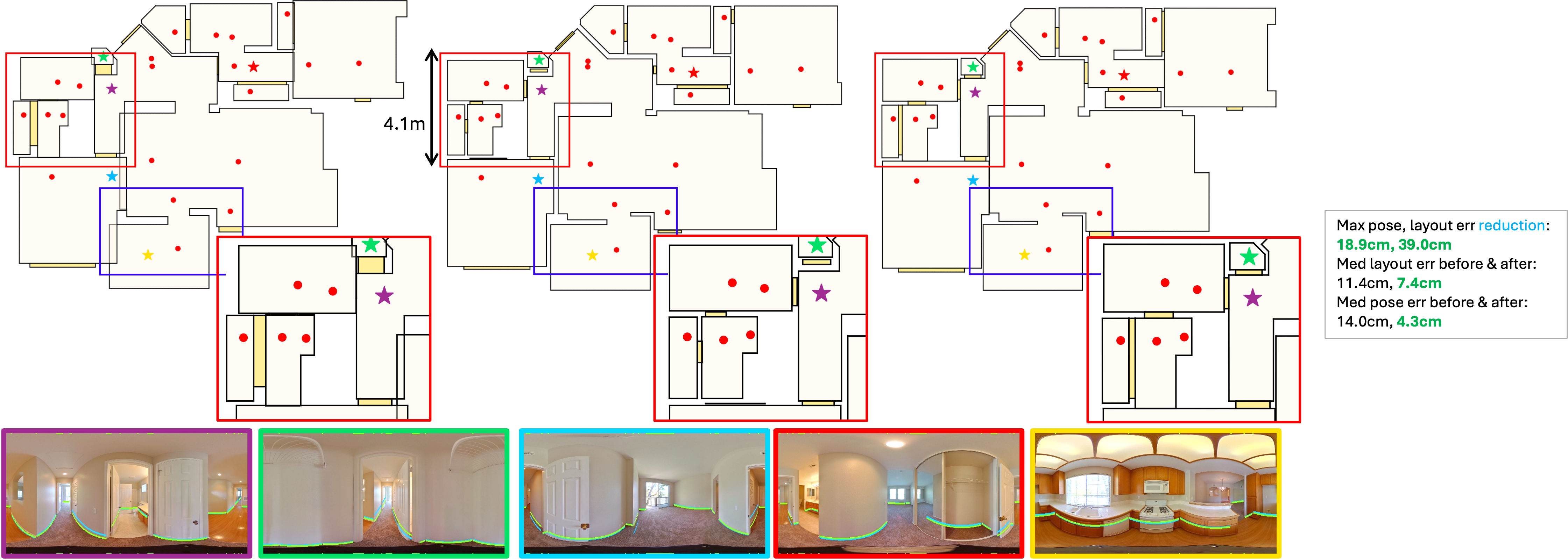

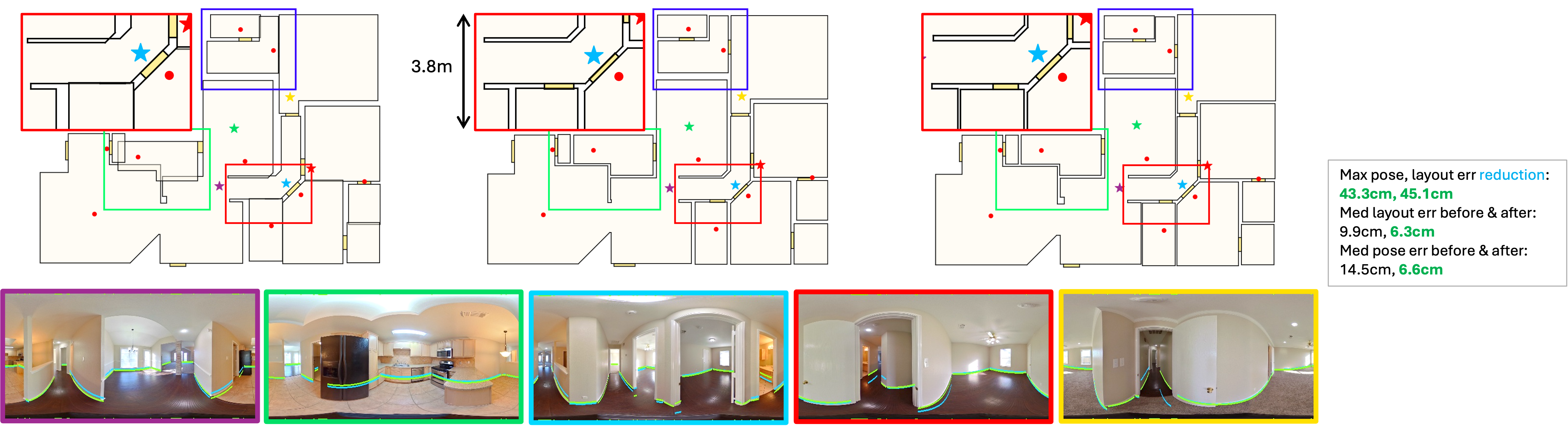

BADGR performs both bundle adjustment (BA) optimization and layout inpainting, refining camera poses and layouts from a given coarse state using 1D floor boundary information from up to 30 or more images of varying input densities. The learned layout-structural constraints, such as wall adjacency and collinearity, help BADGR to 1) constrain the pose graph, 2) mitigate errors from inputs, and 3) inpaint occluded layouts.

Framework

BADGR is a layout diffusion model conditioned on dense per-column adjustment outputs from our proposed planar BA module, which is based on a single-step Levenberg-Marquardt (LM) optimizer. BADGR is trained to predict camera and wall positions while minimizing reprojection errors; the two iterative processes, non-linear optimization and layout generation, are learned jointly with view-consistency and reconstruction losses. See the sections below for architecture details.
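For readers unfamiliar with LM, a single update step can be sketched as below. This is the textbook damped Gauss-Newton update, not BADGR's actual module: the function name `lm_step`, the `damping` parameter, and the dense solve are illustrative assumptions.

```python
import numpy as np

def lm_step(residual, jacobian, damping=1e-2):
    """One textbook Levenberg-Marquardt update (illustrative, not BADGR's code).

    Solves (J^T J + damping * I) delta = -J^T r for the parameter update delta.
    """
    jtj = jacobian.T @ jacobian          # Gauss-Newton approximation of the Hessian
    grad = jacobian.T @ residual         # gradient of 0.5 * ||r||^2
    damped = jtj + damping * np.eye(jtj.shape[0])
    return np.linalg.solve(damped, -grad)
```

With `damping=0` the step reduces to Gauss-Newton, which solves a linear least-squares problem exactly in one step; larger damping shortens the step and turns it toward gradient descent.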

Planar Bundle Adjustment

Our planar bundle adjustment (BA) minimizes reprojection errors between floor boundaries predicted by image-based models and projected wall positions, adjusting wall translations along their normal directions together with the corresponding camera poses. Before optimization, image columns from different images are grouped by global wall instance, with each wall observed by multiple image columns and its floor boundary modeled as a line. Compared to point-based feature matching methods, which often lack robust features with sub-pixel accuracy in wide-baseline indoor environments, our planar BA performs feature matching at the global instance level for greater robustness and uses a larger portion of image columns during optimization.
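The per-column residual described above can be illustrated with a small top-down sketch. Everything here is an assumption made for illustration rather than the paper's parameterization: walls are 2D lines `wall_normal . x = wall_offset`, cameras have a 2D position and a yaw, each image column maps to an azimuth, and the floor boundary is measured as a depression angle for a camera at height `cam_height`.

```python
import numpy as np

def projected_boundary_angle(cam_xy, cam_yaw, column_azimuth,
                             wall_normal, wall_offset, cam_height=1.0):
    """Depression angle (radians) of a wall's floor line in each image column.

    Hypothetical top-down model: assumes the wall lies in front of every
    column's ray (positive intersection distance).
    """
    theta = cam_yaw + np.asarray(column_azimuth, dtype=float)
    rays = np.stack([np.cos(theta), np.sin(theta)], axis=-1)   # (N, 2) unit rays
    # Ray/line intersection: wall_normal . (cam_xy + t * ray) = wall_offset
    t = (wall_offset - wall_normal @ cam_xy) / (rays @ wall_normal)
    return np.arctan2(cam_height, t)

def reprojection_residual(cam_xy, cam_yaw, column_azimuth,
                          wall_normal, wall_offset, observed_angle,
                          cam_height=1.0):
    """Projected minus observed floor-boundary angle, one value per column."""
    projected = projected_boundary_angle(cam_xy, cam_yaw, column_azimuth,
                                         wall_normal, wall_offset, cam_height)
    return projected - observed_angle
```

In this parameterization, translating a wall along its normal changes only `wall_offset`, so every column grouped under that wall instance contributes a residual for the same scalar unknown.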

Learned Global Constraints & BADGR

Layout-structural constraints, such as wall adjacency and collinearity, are crucial for accurate reconstruction, as sparse captures often limit visual overlap between images. Heuristic-based constraints struggle to generalize and may not apply to all scenes. BADGR leverages a denoising diffusion model to learn these constraints without complex regularization. It conditions on the planar BA mechanism, producing more accurate scene refinements than planar BA alone. The diffusion model serves as posterior sampling at each BA step to improve accuracy and efficiency.

BADGR inherits the transformer-based layout generation model from HouseDiffusion. Dense per-column planar BA guidance is restructured and compressed into guidance embeddings, along with coordinate and metadata embeddings for each wall and camera, which are then used as inputs to the transformer engine. The model is trained to predict epsilon to x0. In other words, BA guidance provides the gradients of adjustment, while the transformer makes the final prediction on the refined layouts and poses, ensuring view consistency and maintaining global constraints at each denoising step.

Compared to a guided diffusion model like PoseDiffusion, where guidance is simply added to the predicted adjustment, BADGR learns to combine global layout-structural constraints with the objective of maximizing view consistency, trained with an additional reprojection loss. The model avoids conflicting flows, unlike guided diffusion, as shown below. Our quantitative results show that BADGR is more accurate than both the guided diffusion model and the BA-only model, requiring 5X fewer iterations to converge compared to the BA-only model.

BADGR trains exclusively on 2D floor plans with simulated floor boundaries generated on-the-fly during training, simplifying data acquisition, enabling robust augmentation, and supporting a variety of input densities.
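One way such floor boundaries could be simulated from a 2D floor plan is by casting one ray per image column and recording the nearest wall hit. The sketch below is a guess at the general idea, not the authors' data pipeline: the function name `simulate_floor_boundary`, the evenly spaced columns, the Gaussian noise model, and the default values are all assumptions.

```python
import numpy as np

def simulate_floor_boundary(polygon, cam_xy, num_columns=8,
                            cam_height=1.0, noise_std=0.0, rng=None):
    """Per-column (wall index, depression angle) for a camera inside a room.

    Hypothetical simulator: `polygon` lists room corners in order, and wall w
    joins corner w to corner w + 1 (wrapping around).
    """
    polygon = np.asarray(polygon, dtype=float)
    cam_xy = np.asarray(cam_xy, dtype=float)
    ends = np.roll(polygon, -1, axis=0)
    azimuths = np.arange(num_columns) * 2.0 * np.pi / num_columns
    wall_ids = np.full(num_columns, -1)
    depths = np.full(num_columns, np.inf)
    for i, az in enumerate(azimuths):
        u = np.array([np.cos(az), np.sin(az)])
        for w, (a, b) in enumerate(zip(polygon, ends)):
            e = b - a
            denom = u[0] * e[1] - u[1] * e[0]            # cross(u, e)
            if abs(denom) < 1e-12:                       # ray parallel to wall
                continue
            diff = a - cam_xy
            t = (diff[0] * e[1] - diff[1] * e[0]) / denom   # distance along ray
            s = (diff[0] * u[1] - diff[1] * u[0]) / denom   # position along wall
            if t > 0 and 0.0 <= s <= 1.0 and t < depths[i]:
                depths[i], wall_ids[i] = t, w
    angles = np.arctan2(cam_height, depths)
    if noise_std > 0:
        if rng is None:
            rng = np.random.default_rng()
        # Perturb the boundary to mimic errors of an image-based predictor.
        angles = angles + rng.normal(0.0, noise_std, size=angles.shape)
    return wall_ids, angles
```

Varying `num_columns`, `noise_std`, and the number of sampled cameras per floor plan is one simple way to produce inputs of different densities and noise levels on the fly.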